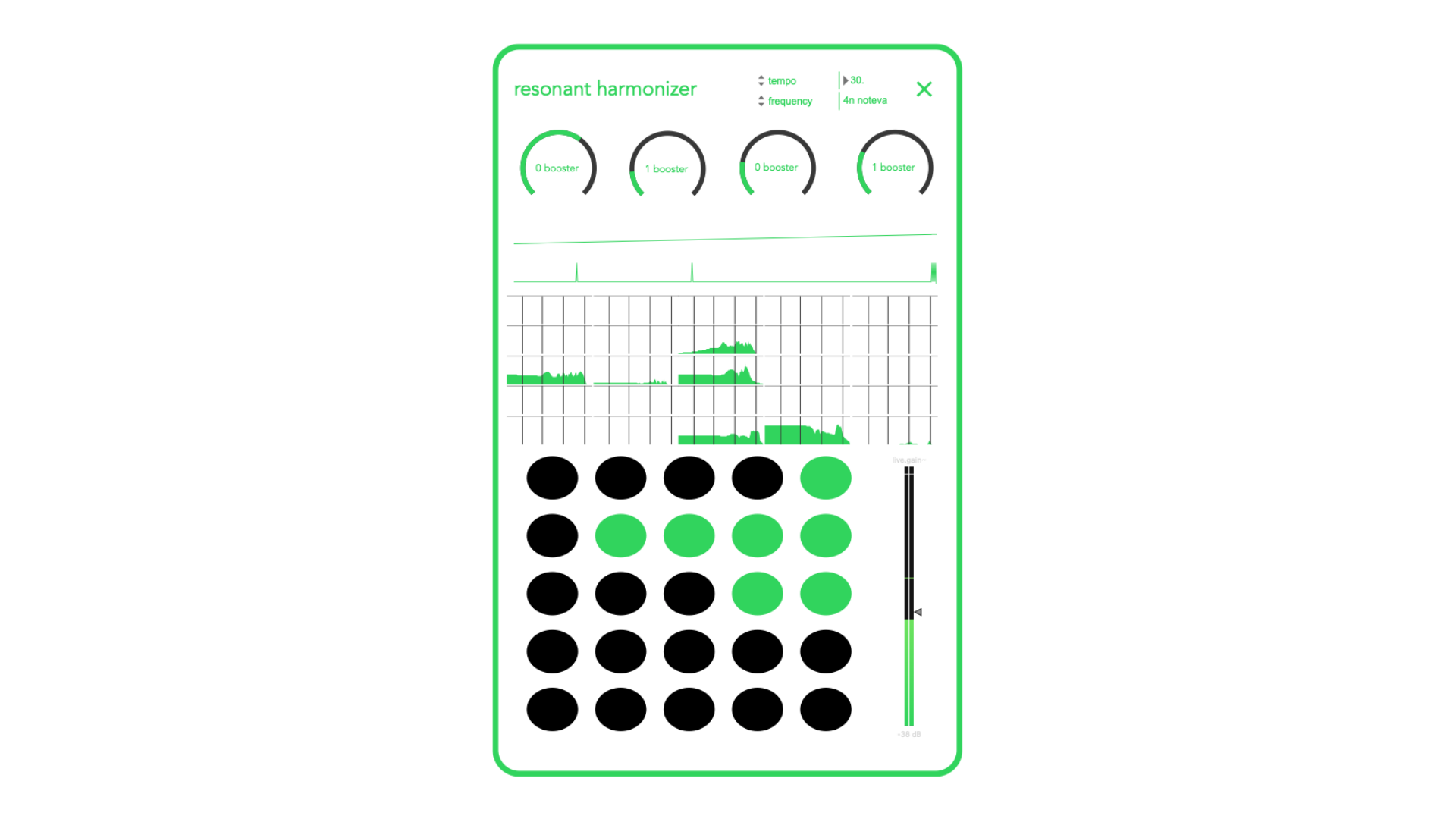

Resonant Harmonizer

Max Devices

Interface details and download options coming soon.

Touch screen interfaces have changed how we interact with digital devices. Modern capacitive touchscreens detect multiple simultaneous touch points, enabling gestures such as pinch-to-zoom, rotation, and multi-finger scrolling. The technology works by measuring the change in capacitance when a conductive object, such as a finger, touches the screen surface.

Advanced touch interfaces add haptic feedback systems that respond to user actions with tactile cues, creating a more immersive and intuitive experience. These systems can simulate the feel of pressing a physical button, provide texture sensations, or give directional feedback for navigation.

The evolution from resistive to capacitive screens, and more recently to force-sensitive touchscreens, has enabled new interaction paradigms. Force touch differentiates between light taps and firm presses, unlocking contextual menus and shortcuts.

More recent developments include ultrasonic fingerprint sensors integrated directly into displays, which allow secure authentication without dedicated hardware buttons. Researchers are also working on touchscreens that can render tactile textures, potentially letting users "feel" different materials and surfaces through the screen.

These interfaces must be designed with accessibility in mind: support for voice commands, larger touch targets for users with motor difficulties, and compatibility with assistive technologies. The best touch interfaces feel natural and responsive, so that the technology itself recedes from the user's attention.
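As a rough illustration of the pinch-to-zoom gesture and the light-tap versus firm-press distinction described above, the sketch below computes a zoom factor from two touch points and classifies a press by its normalized force. The function names, the `(x, y)` tuple format, and the `0.5` threshold are assumptions made for this example, not part of any particular touch API.

```python
import math


def pinch_scale(start, current):
    """Return the zoom factor implied by a two-finger pinch.

    `start` and `current` are each a pair of (x, y) touch points
    (a hypothetical format chosen for this sketch).
    """
    d0 = math.dist(start[0], start[1])      # finger spacing at gesture start
    d1 = math.dist(current[0], current[1])  # finger spacing now
    if d0 == 0:
        raise ValueError("starting touch points must be distinct")
    return d1 / d0


def classify_press(force, firm_threshold=0.5):
    """Classify a normalized force reading (0.0 to 1.0) as 'light' or 'firm'.

    The threshold is an illustrative assumption; real hardware would need
    calibration.
    """
    return "firm" if force >= firm_threshold else "light"
```

A scale above 1 means the fingers moved apart (zoom in), and a scale below 1 means they moved together (zoom out).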

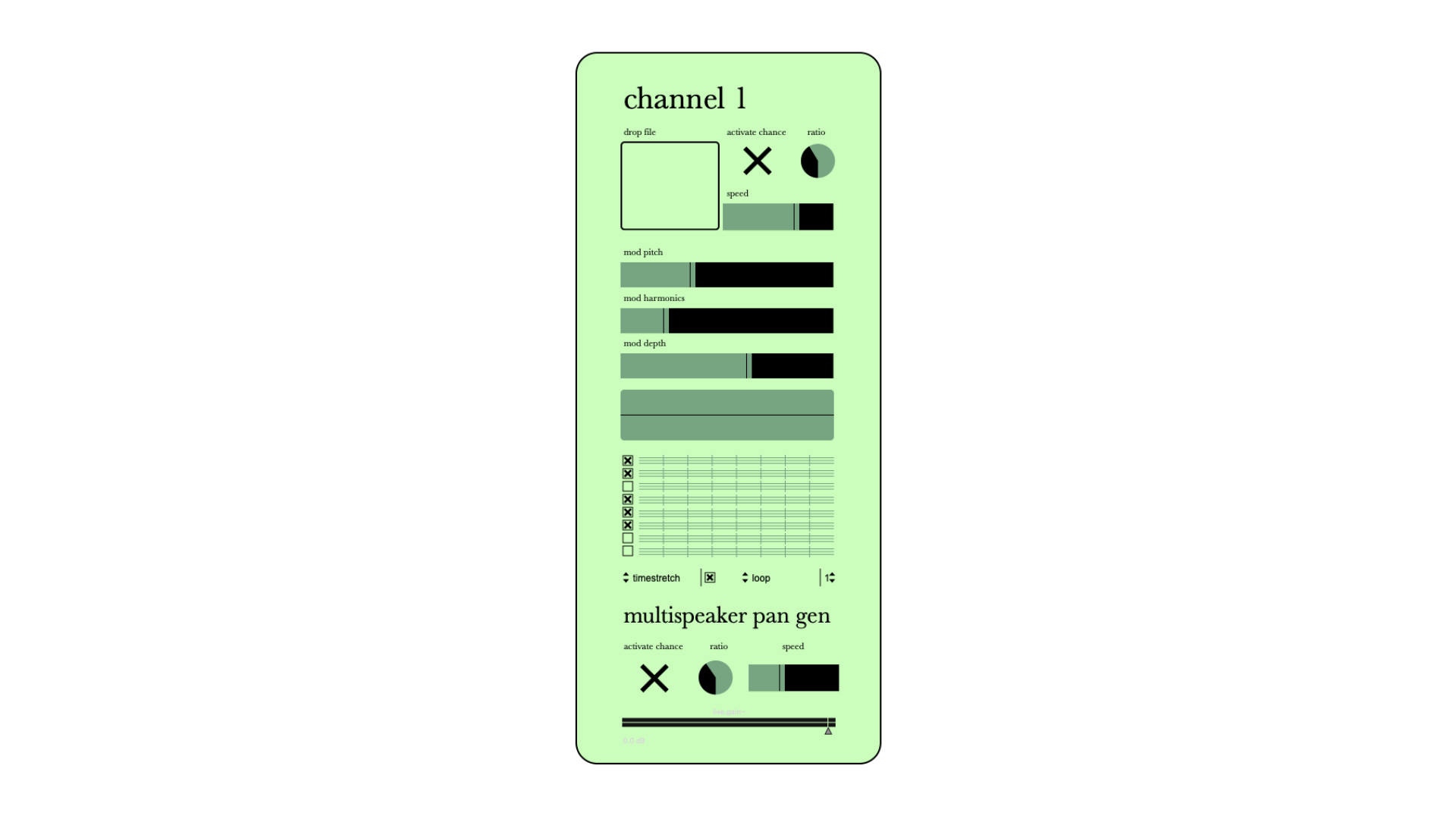

Multichannel Samples

Max Devices

Interface details and download options coming soon.

Data dashboards serve as the command center for modern business intelligence, turning raw data into actionable insights through visualization. These interfaces aggregate information from multiple sources and present complex datasets in digestible formats that support quick decision-making.

Effective dashboard design follows the principle of progressive disclosure: high-level KPIs are visible at first glance, and users can drill down into detailed analytics. Interactive elements such as filters, date ranges, and comparative views let users explore data from different perspectives without overwhelming the interface.

Real-time data streaming keeps dashboards in step with the current state of business operations. This is particularly important for monitoring systems, financial trading platforms, and operational control centers, where split-second decisions can have significant impact.

Modern dashboards use machine learning to provide predictive analytics, identifying trends and anomalies that might not be immediately apparent to human observers. These systems can automatically highlight unusual patterns, forecast future performance, and suggest optimization strategies.

Customization is key to dashboard effectiveness, because different roles within an organization need different views of the same underlying data. A sales manager might focus on revenue metrics and pipeline health, while an operations manager needs visibility into production efficiency and resource utilization.

The best dashboards balance information density with clarity, using color coding, typography, and layout to guide attention to the most critical metrics while maintaining visual hierarchy and avoiding cognitive overload.
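To make the idea of automatically highlighting unusual patterns concrete, here is a minimal sketch that flags outliers in a metric series. It uses a plain z-score rule as a simple statistical stand-in for the machine-learning models mentioned above. The function name and the default threshold of `2.0` are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(values, threshold=2.0):
    """Return the indices of values more than `threshold` sample standard
    deviations from the mean.

    This is a basic z-score check, not a trained model. It needs at least
    two data points, because `stdev` is undefined for fewer.
    """
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts; the last day is an obvious spike.
daily_orders = [10, 11, 9, 10, 12, 10, 11, 50]
spikes = flag_anomalies(daily_orders)
```

A dashboard could use the returned indices to color the corresponding data points. A production system would more likely use a rolling window or a seasonal model so that a single spike does not distort the baseline.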

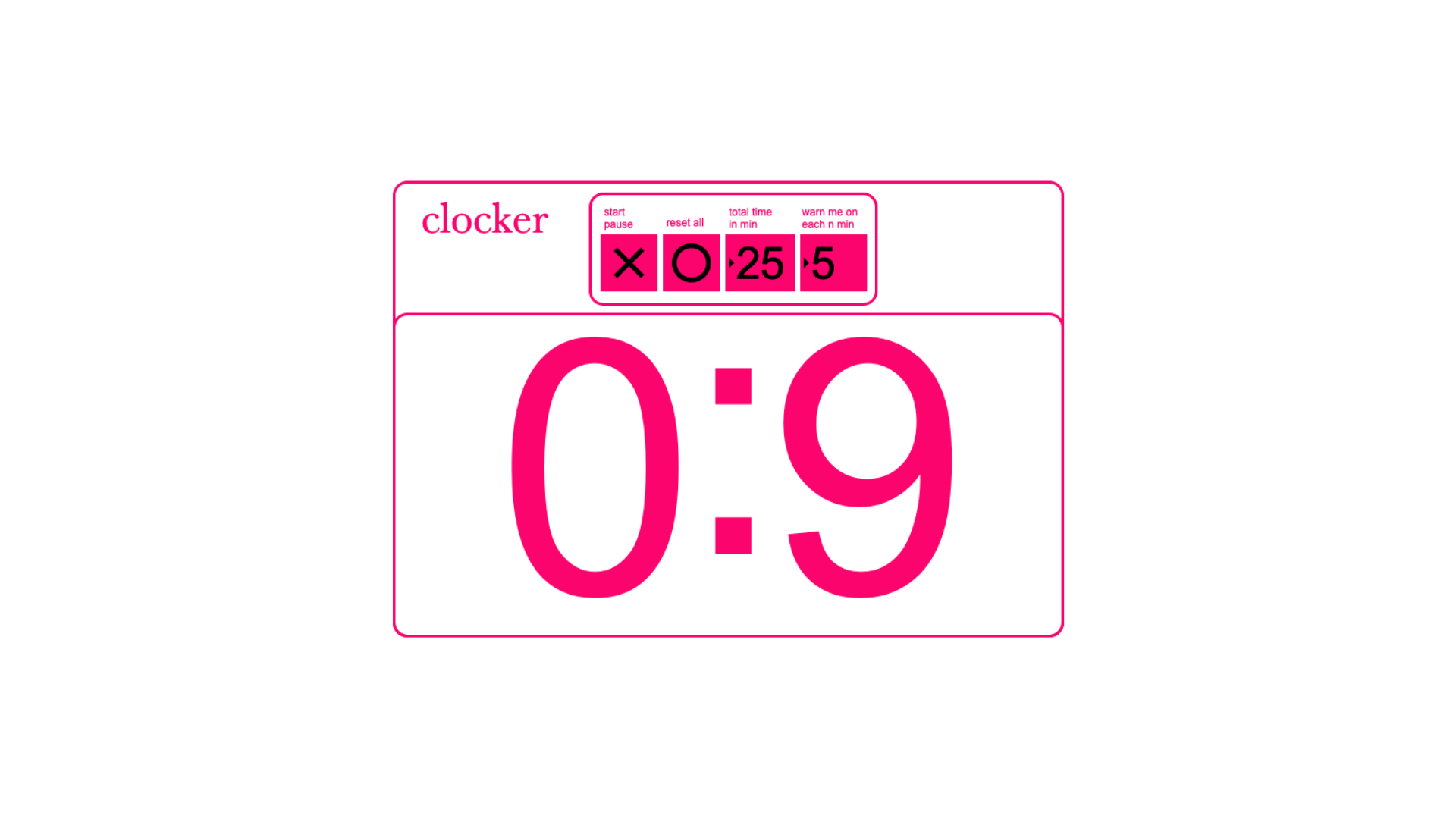

Clocker

Max Devices

Interface details and download options coming soon.

Voice control interfaces are among the most natural forms of human-computer interaction, building on our innate ability to communicate through speech. These systems combine speech recognition, natural language processing, and contextual understanding to create conversational experiences.

Modern voice interfaces use neural networks trained on massive datasets to understand not just individual words but intent, context, and nuance. They can handle accents, background noise, and conversational speech patterns that earlier rule-based systems could not process.

The challenge in voice interface design lies in managing user expectations and handling the inherent ambiguity of natural language. Users often speak to voice systems as they would to other people, expecting them to understand implied context, follow-up questions, and even emotional undertones.

Privacy and security considerations are paramount. Always-listening devices must balance convenience with user privacy by implementing wake words, local processing where possible, and clear data handling policies.

Multimodal integration is becoming increasingly important: voice commands are combined with visual feedback, gesture recognition, or traditional input methods. This creates more robust interaction models that can adapt to different contexts and user preferences.

Voice interfaces excel in hands-free scenarios, such as driving or cooking, and when accessibility needs make traditional input methods challenging. The most successful implementations focus on specific use cases where voice provides clear advantages over other interaction methods.

Future developments include emotion recognition, which would allow systems to respond appropriately to user mood and stress levels, and improved contextual memory that enables natural, ongoing conversations rather than isolated command-response interactions.
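The wake-word gating described above can be sketched on already-transcribed text as follows. Input that does not begin with the wake phrase is ignored, and the remainder is matched to an intent. Keyword matching here is a deliberately simple stand-in for the neural intent models the text refers to, and the wake phrase, intent names, and keyword lists are all hypothetical.

```python
WAKE_WORD = "hey device"  # hypothetical wake phrase

# Hypothetical intents, each triggered when all of its keywords are present.
INTENTS = {
    "lights_on": ("turn on", "light"),
    "set_timer": ("set", "timer"),
}


def handle_utterance(transcript):
    """Return an intent name, "unknown", or None if the wake word is absent."""
    text = transcript.lower().strip()
    if not text.startswith(WAKE_WORD):
        return None  # not addressed to the device: ignore the input entirely
    command = text[len(WAKE_WORD):].strip(" ,")
    for intent, keywords in INTENTS.items():
        if all(keyword in command for keyword in keywords):
            return intent
    return "unknown"
```

Returning `None` when the wake word is missing, rather than `"unknown"`, keeps "not addressed to the device" distinct from "addressed but not understood", which matters for the privacy behavior described above.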

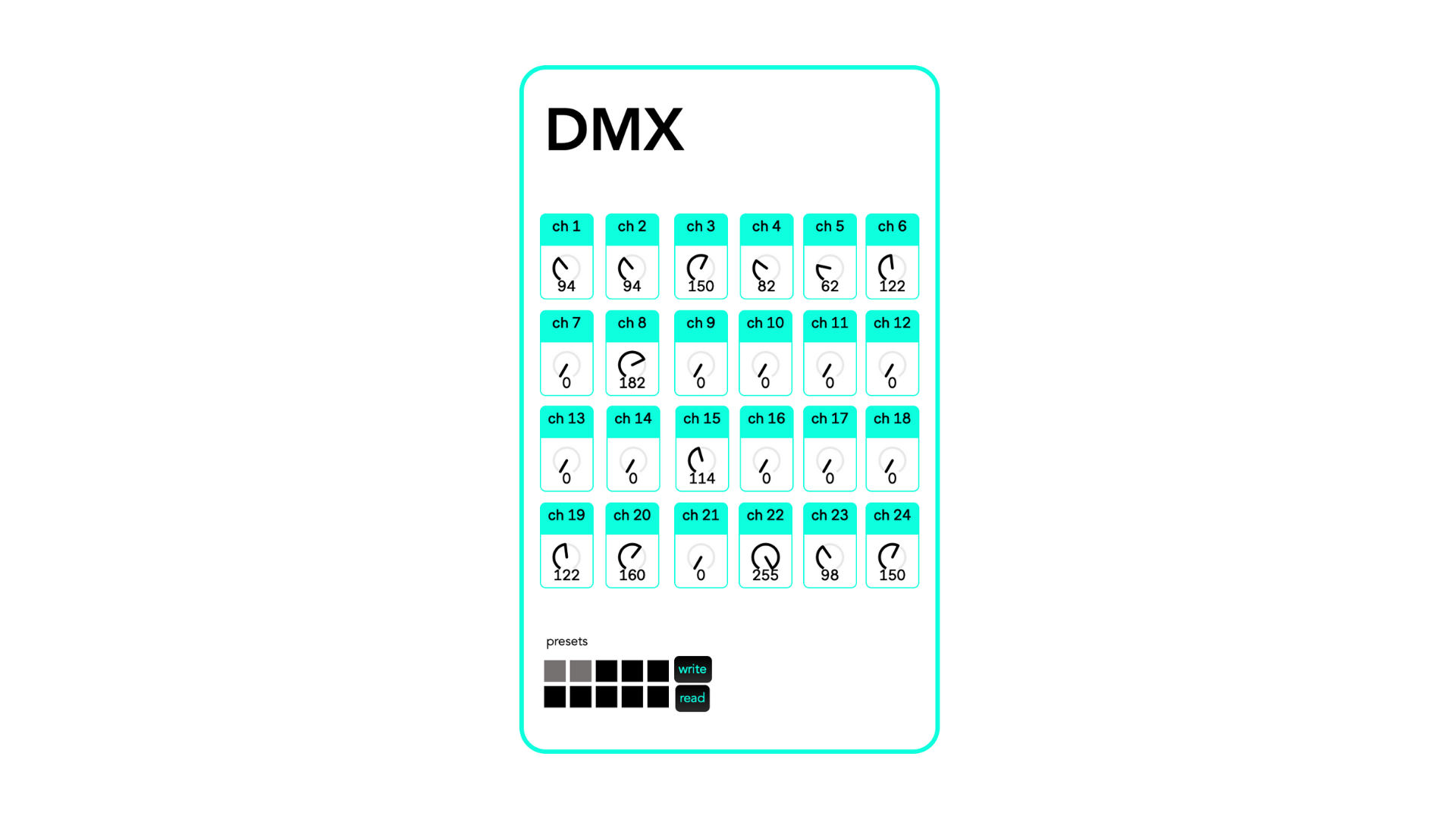

DMX Router

M4L Devices

Interface details and download options coming soon.
